By Dzianis Lukashevich, Director of Platforms and Solutions, Analog Devices

At the heart of Industry 4.0 is the concept of collecting enormous volumes of data from sensors, actuators and similar devices, described as “big data”. This requires a detailed virtual view of machines, systems and processes, so that valuable, actionable information can be generated throughout the entire value chain. As data-processing systems and architectures become more complex, and the number of data-generating devices keeps increasing, the question is how best to extract the most relevant, high-quality and useful information, or “smart data”.

Collecting data and storing it in the cloud for future analysis and use is the prevalent school of thought, but not a particularly effective one. Most of the data remains unused, and finding a solution later on becomes more complex and costly. A better approach is to decide early on which information is most relevant to the application and at which point in the data flow it should be extracted; see Figure 1. In effect, this means refining the data, turning big data into smart data along the entire processing chain.

At the application level, it is already possible to decide which AI algorithms are most likely to succeed for the individual processing steps. This depends on boundary conditions such as the available data, the application type, the available sensor modalities and background information about the underlying physical processes.

For the individual processing steps, correct handling and interpretation of the data are extremely important, and both depend largely on the application and on the relevance and accuracy of the sensor data. Many parameters, such as temporal behaviour and dependencies between multiple sensors, have a direct effect on the desired information. For complex tasks, simple threshold values and manually determined logic are no longer sufficient, and they do not adapt automatically to changing environmental conditions.

Figure 1: Division of the algorithm pipeline into embedded, edge and cloud platforms

To generate the greatest value, the entire data-processing chain, with all the algorithms needed at each step, must be implemented across all levels; in most cases it is advantageous to implement the algorithms as close to the sensor as possible. This compresses and refines the data early on and reduces communication and storage costs. In addition, extracting the essential information early makes the global algorithms at the higher levels less complex to develop. In most cases, algorithms from the field of streaming analytics are also useful, because they avoid unnecessary storage of data and, thus, high data-transfer and storage costs. These algorithms use each data point only once; that is, the complete information is extracted directly, without the data having to be stored.
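To illustrate the single-pass principle, the following minimal Python sketch (an illustration, not code from the iCOMOX SDK) computes the running mean, variance and RMS of a sensor signal with Welford's algorithm. Each sample updates a few accumulators and is then discarded, so memory use does not grow with the length of the recording. The class name `RunningStats` is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Single-pass mean, variance and RMS (Welford's algorithm).

    Each sample is consumed once and then discarded; only four numbers are kept.
    """
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0       # sum of squared deviations from the running mean
    sum_sq: float = 0.0   # running sum of squares, used for the RMS

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)  # uses the already-updated mean
        self.sum_sq += x * x

    @property
    def variance(self) -> float:
        # Sample variance; reported as 0.0 for fewer than two samples.
        return self.m2 / (self.n - 1) if self.n > 1 else 0.0

    @property
    def rms(self) -> float:
        return math.sqrt(self.sum_sq / self.n) if self.n else 0.0


stats = RunningStats()
for sample in [1.0, 2.0, 3.0, 4.0]:
    stats.update(sample)
print(stats.mean, stats.variance)  # 2.5 1.6666666666666667
```

Welford's update is used instead of accumulating the sum and the sum of squares for the variance because it is numerically more stable for long recordings with a large offset.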

Embedded platform for condition-based monitoring

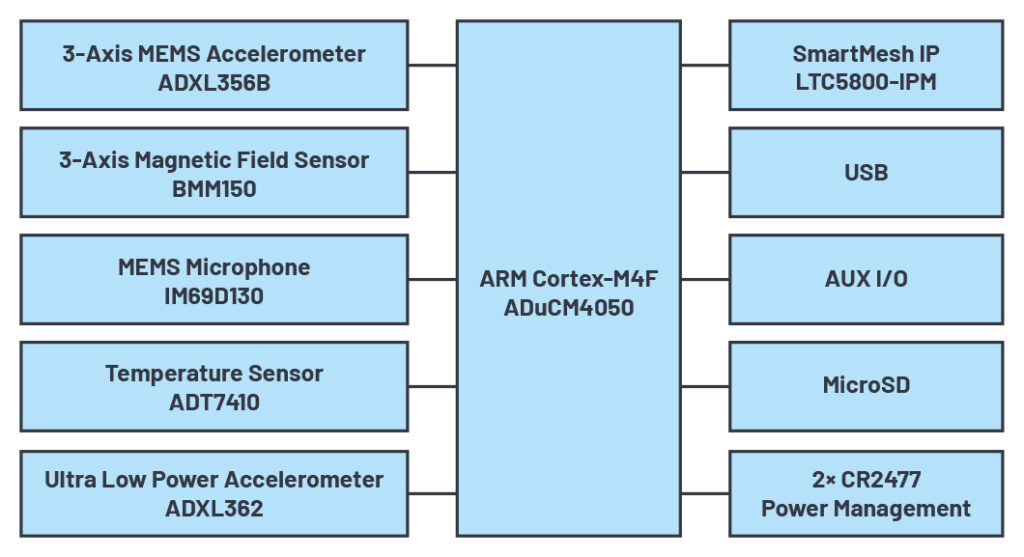

iCOMOX, an open embedded platform from Shiratech Solutions, Arrow and Analog Devices based on an ARM Cortex-M4F processor, integrates power management with sensors and peripheral devices for data acquisition, processing, control and connectivity. The solution is well suited to local data processing and to early refinement of the data with AI algorithms.

iCOMOX stands for intelligent condition monitoring box (Figure 2). It can easily be applied to structural-health and machine-condition monitoring in industry, based on the analysis of vibration, magnetic fields, sound and temperature. The platform can be customised with additional sensor capabilities, such as gyroscopes. The iCOMOX’s AI methods enable a better estimate of machine status through multi-sensor data fusion, classifying operating and fault conditions with greater granularity and higher probability.

Figure 2: iCOMOX block diagram

For wireless communication, the iCOMOX provides a highly reliable and robust solution with extremely low power consumption. A SmartMesh IP network consists of a highly scalable, self-forming and self-optimising multi-hop mesh of wireless nodes that collect and relay data. A network manager monitors and manages network performance and security, and exchanges data with the host application. The intelligent routing of the SmartMesh IP network determines an optimum path for each individual packet, taking into account the connection quality, the schedule for each packet transaction and the number of hops in the communication link.

Especially for wireless, battery-operated condition-monitoring systems, embedded AI can help extract the full added value. Because the iCOMOX converts sensor data to smart data locally, less data is transmitted, and consequently less power is consumed, than when raw sensor data is transmitted directly to the edge or the cloud.
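A back-of-the-envelope calculation shows the scale of the saving. The figures below are assumptions chosen for illustration (a 3-axis accelerometer sampled at 1 kHz with 16-bit samples, versus 16 single-precision features reported once per second), not iCOMOX specifications; the function name `data_rates` is hypothetical.

```python
def data_rates(sample_rate_hz: float, axes: int, bytes_per_sample: int,
               n_features: int, bytes_per_feature: int, report_period_s: float):
    """Return raw and feature data rates in bytes/s and their ratio (all inputs assumed)."""
    raw = sample_rate_hz * axes * bytes_per_sample             # streaming every raw sample
    smart = n_features * bytes_per_feature / report_period_s   # sending only the feature vector
    return raw, smart, raw / smart


# Assumed example: 3-axis accelerometer, 1 kHz, 16-bit samples,
# versus 16 float32 features sent once per second.
raw, smart, ratio = data_rates(1000, 3, 2, 16, 4, 1.0)
print(f"raw: {raw:.0f} B/s, smart: {smart:.0f} B/s, reduction: {ratio:.1f}x")
```

With these assumed figures, the radio carries 64 B/s instead of 6000 B/s, roughly a 94-fold reduction. Since radio activity is often a large part of a wireless node's energy budget, this is where local processing pays off.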

The iCOMOX and its AI algorithms can be applied to monitoring machines, systems, structures and processes, from detecting anomalies to complex fault diagnostics and immediate initiation of fault elimination. If behaviour models are available for certain types of damage, that damage can even be predicted. Maintenance can then be carried out at an early stage, avoiding unnecessary damage-related failures. If no predictive model exists, the embedded platform can learn a machine’s behaviour successively over time, to derive a comprehensive model for predictive maintenance. In addition, the iCOMOX can be used to optimise complex manufacturing processes to achieve a higher yield or better product quality.
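As a sketch of how a baseline can be learned successively, the hypothetical class below tracks an exponentially weighted mean and variance of a scalar condition indicator (for example, a vibration RMS value) and flags samples that deviate by more than `k` standard deviations. The forgetting factor, threshold and warm-up length are assumed values, and the detector is an illustration rather than the iCOMOX algorithm.

```python
import math


class EwmaBaseline:
    """Hypothetical anomaly detector with a slowly adapting learned baseline.

    alpha: forgetting factor (assumed); k: alarm threshold in standard deviations;
    warmup: number of samples before alarms are allowed.
    """

    def __init__(self, alpha: float = 0.05, k: float = 4.0, warmup: int = 20):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.n = 0
        self.mean = 0.0
        self.var = 0.0

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to the learned baseline."""
        self.n += 1
        if self.n == 1:
            self.mean = x  # initialise the baseline with the first sample
            return False
        diff = x - self.mean
        anomalous = self.n > self.warmup and abs(diff) > self.k * math.sqrt(self.var)
        if not anomalous:
            # Incremental exponentially weighted update of mean and variance.
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1.0 - self.alpha) * (self.var + diff * incr)
        return anomalous
```

Anomalous samples are deliberately not folded into the baseline, so a developing fault does not gradually become the new normal.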

Embedded AI algorithms for smart sensors

With data processing by AI algorithms, automated analysis becomes possible even for complex sensor data. The desired information, and thus the added value, is derived automatically from the data along the processing chain. The choice of algorithm often depends on existing knowledge about the application. If extensive domain knowledge is available, AI plays a more supporting role and the algorithms used can be quite rudimentary. If no expert knowledge exists, the algorithms can be much more complex. In many cases, the application defines the hardware and, through this, the limitations for the algorithms.

For model building, which is always part of an AI algorithm, there are basically two different approaches: data-driven and model-based.

If only data is available, but no background information that could be described in the form of mathematical equations, then so-called data-driven approaches must be chosen. These algorithms extract the desired information (smart data) directly from the sensor data (big data). They encompass the full range of machine-learning methods, including linear regression, neural networks, random forests and hidden Markov models.

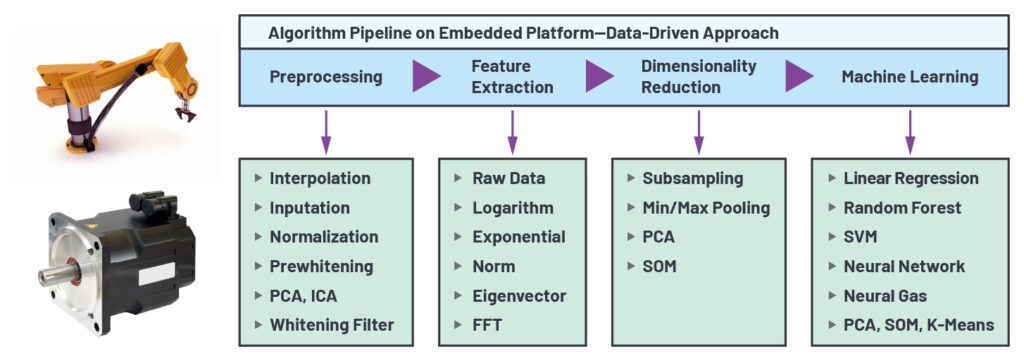

A typical algorithm pipeline for data-driven approaches that can be implemented on the iCOMOX consists of three components: (1) data pre-processing, (2) feature extraction and dimensionality reduction, and (3) the actual machine-learning algorithm; see Figure 3.

Figure 3: Data-driven approaches for embedded platforms

Pre-processing ensures that the downstream algorithms, especially the machine-learning algorithms, converge to an optimum solution within the shortest possible time. Missing data must be replaced using simple interpolation methods that take into account the time dependence and the interdependence between the data of different sensors. Furthermore, the data is modified by pre-whitening algorithms so that the signals become mutually uncorrelated. As a result, there are no longer any linear dependencies within the time series or between sensors. Principal component analysis (PCA), independent component analysis (ICA) and so-called “whitening filters” are typical algorithms used for pre-whitening.
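The two pre-processing steps can be sketched with NumPy as follows. The sketch makes simplifying assumptions: gaps (marked as `NaN`) are filled by linear interpolation in time only, ignoring cross-sensor dependencies, and pre-whitening uses PCA on a block of samples. The function names are hypothetical.

```python
import numpy as np


def fill_gaps(x: np.ndarray) -> np.ndarray:
    """Replace NaN samples in a 1D series by linear interpolation in time."""
    x = np.asarray(x, dtype=float).copy()
    idx = np.arange(x.size)
    missing = np.isnan(x)
    # np.interp holds the nearest valid value for gaps at either end.
    x[missing] = np.interp(idx[missing], idx[~missing], x[~missing])
    return x


def pca_whiten(X: np.ndarray, eps: float = 1e-12):
    """PCA whitening of an (n_samples, n_channels) block.

    Returns the whitened data plus the mean and whitening matrix, so the same
    transform can later be applied to new samples as (x - mu) @ W.
    """
    mu = X.mean(axis=0)
    Xc = X - mu
    cov = np.cov(Xc, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)   # covariance is symmetric
    W = eigvecs / np.sqrt(eigvals + eps)     # rotate onto principal axes, scale to unit variance
    return Xc @ W, mu, W
```

One option on a memory-constrained device is to estimate `mu` and `W` once (or update them slowly) and then apply them to each incoming sample, rather than storing blocks of raw data.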

During feature extraction, characteristics, also known as features, are derived from the pre-processed data. This part of the processing chain strongly depends on the actual application. Due to the limited computing power of embedded platforms, it is not yet possible to implement computationally intensive, fully automated algorithms here that evaluate the various features and use specific optimisation criteria to find the best ones; genetic algorithms are an example. Rather, for low-power embedded platforms such as the iCOMOX, the feature-extraction method must be specified manually for each individual application. Possible methods include transforming the data into the frequency domain (fast Fourier transform), applying a logarithm to the raw sensor data, normalising the accelerometer or gyroscope data, finding the largest eigenvectors in PCA, or performing other calculations on the raw sensor data. Different feature-extraction algorithms can also be selected for different sensors. The result is a large feature vector containing all the relevant features from all of the sensors.
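As an example of manually specified features, the hypothetical function below computes, for one window of 3-axis accelerometer data, the vector magnitude, its RMS, the dominant frequency from an FFT and logarithmically compressed energies in a few coarse frequency bands, and concatenates them into one feature vector. The choice of features and the number of bands are assumptions for illustration; a real application would select them for its own fault signatures.

```python
import numpy as np


def extract_features(accel_xyz: np.ndarray, fs: float, n_bands: int = 8) -> np.ndarray:
    """Hand-picked features for one window of 3-axis accelerometer data.

    accel_xyz: (n_samples, 3) array; fs: sampling rate in Hz.
    Returns [rms, dominant frequency in Hz, n_bands log band energies].
    """
    magnitude = np.linalg.norm(accel_xyz, axis=1)       # orientation-independent magnitude
    ac = magnitude - magnitude.mean()                   # remove the static (gravity) part
    spectrum = np.abs(np.fft.rfft(ac * np.hanning(ac.size)))  # windowed one-sided spectrum
    freqs = np.fft.rfftfreq(ac.size, d=1.0 / fs)
    bands = np.array_split(spectrum, n_bands)           # coarse, equal-width frequency bands
    band_energy = np.log1p([np.sum(b ** 2) for b in bands])  # log compresses dynamic range
    peak_hz = freqs[np.argmax(spectrum)]
    rms = np.sqrt(np.mean(ac ** 2))
    return np.concatenate([[rms, peak_hz], band_energy])
```

Features from other sensors (gyroscope, magnetometer, microphone, temperature) would be computed with their own functions and appended, giving the large combined feature vector described above.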

If the vector’s dimensionality exceeds a certain size, it must be reduced with dimensionality-reduction algorithms. The simplest option is to take the minimum and/or maximum values within a certain window; more complex algorithms such as PCA or self-organising maps (SOM) can also be used.
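Both reduction options can be sketched as follows, assuming NumPy: `window_max` keeps only the maximum of each group of adjacent entries (for example, neighbouring FFT bins), while `pca_fit` and `pca_project` project the feature vector onto its leading principal components. The function names and the use of SVD to obtain the components are choices made for this sketch.

```python
import numpy as np


def window_max(vector: np.ndarray, window: int) -> np.ndarray:
    """Keep the maximum of each block of `window` adjacent entries (trailing remainder dropped)."""
    n = (vector.size // window) * window
    return vector[:n].reshape(-1, window).max(axis=1)


def pca_fit(X: np.ndarray, k: int):
    """Return the mean and the k leading principal directions of X (rows = samples)."""
    mu = X.mean(axis=0)
    # Right singular vectors of the centred data are the principal directions,
    # ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(X - mu, full_matrices=False)
    return mu, vt[:k].T          # shape (n_features, k)


def pca_project(x: np.ndarray, mu: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Reduce one feature vector (or a batch of rows) to k dimensions."""
    return (x - mu) @ components
```

The PCA directions would typically be fitted offline or during a commissioning phase; on the device, the projection then costs only one matrix-vector product per feature vector.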

Only after the data has been completely pre-processed, and the features relevant to the respective application extracted, can the machine-learning algorithms be employed to extract different information on the embedded platform. As with feature extraction, the choice of machine-learning algorithm strongly depends on the application. Fully automated selection of the optimum learning algorithm, for example via genetic algorithms, is likewise not possible due to the limited computing power.

However, even somewhat more complex neural networks, including their training phase, can be implemented on embedded platforms such as the iCOMOX. The decisive factor here is the limited available memory. For this reason, the machine-learning algorithms, like the other algorithms, must be modified to process the sensor data directly.

Each data point is used only once; that is, all of the relevant information is extracted directly, which eliminates both the memory-intensive collection of large amounts of data and the associated high data-transfer and storage costs. This type of processing is also known as “streaming analytics”.
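A minimal sketch of such a single-pass learner, assuming a binary classification task, is logistic regression trained by stochastic gradient descent. The article does not say which learning algorithm the iCOMOX uses; logistic regression is a stand-in chosen because its memory footprint (one weight per feature plus a bias) is fixed, and each labelled sample is used for one update and then discarded. The class and method names and the learning rate are hypothetical.

```python
import math


class OnlineLogisticRegression:
    """Binary classifier trained by stochastic gradient descent, one sample at a time."""

    def __init__(self, n_features: int, lr: float = 0.1):
        self.w = [0.0] * n_features   # memory use is fixed, independent of samples seen
        self.b = 0.0
        self.lr = lr                  # assumed learning rate

    def predict_proba(self, x) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)               # numerically stable branch for negative z
        return e / (1.0 + e)

    def learn_one(self, x, y: int) -> None:
        """Update with one labelled sample (y in {0, 1}); the sample is not stored."""
        err = self.predict_proba(x) - y   # gradient of the log-loss w.r.t. z
        self.b -= self.lr * err
        for i, xi in enumerate(x):
            self.w[i] -= self.lr * err * xi
```

In use, each new feature vector is first classified with `predict_proba` and, whenever a label becomes available (for example, a confirmed fault), passed once to `learn_one`.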

Dynamic pose estimation with model-based approaches

Another, fundamentally different approach is modelling by means of formulas and explicit relationships between the sensor data and the desired information. These approaches require physical background information or a mathematical description of the system behaviour. Such model-based approaches combine sensor data with background information to yield a more precise result for the desired information. The best-known examples include the Kalman filter (KF) for linear systems, and the unscented Kalman filter (UKF), the extended Kalman filter (EKF) and the particle filter (PF) for non-linear systems. The choice of filter strongly depends on the respective application.

A typical algorithm pipeline for model-based approaches that can be implemented on embedded platforms such as the iCOMOX is composed of three parts: (1) outlier detection, (2) the prediction step and (3) the filtering step; see Figure 4.

Figure 4: Model-based approaches for embedded platforms

During outlier detection, sensor data far removed from the estimate of the system condition is either given a reduced (fractional) weight or excluded completely from further processing, which makes data processing more robust.
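One common way to implement this for a scalar measurement is to gate on the normalised innovation squared, that is, the squared difference between measurement and prediction divided by the innovation variance. The sketch below returns a weight of 1 for consistent measurements, a reduced weight for suspicious ones and 0 for gross outliers. The thresholds are assumptions: 6.63 is roughly the 99% quantile of the chi-squared distribution with one degree of freedom, and 25 corresponds to five standard deviations. The function name is hypothetical.

```python
def measurement_weight(z: float, z_pred: float, s: float,
                       gate: float = 6.63, hard_gate: float = 25.0) -> float:
    """Weight in [0, 1] for scalar measurement z.

    z_pred: predicted measurement; s: innovation variance (prediction + measurement noise).
    """
    d2 = (z - z_pred) ** 2 / s   # normalised innovation squared
    if d2 <= gate:
        return 1.0               # consistent with the prediction: full weight
    if d2 > hard_gate:
        return 0.0               # gross outlier: exclude completely
    return gate / d2             # suspicious: fractional weight
```

A weight `w` can be applied, for example, by inflating the measurement-noise variance to `R / w` before the filter update, so down-weighted measurements pull the estimate less; a weight of 0 means the update is skipped.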

In the prediction step, the current estimate of the system condition is propagated forward in time with a probabilistic system model that predicts the future system condition. This model is often derived from a deterministic system equation that describes how the future system condition depends on the current one, as well as on other input parameters and disturbances. In condition monitoring of an industrial robot, for example, this would be the dynamic equation for its individual arms, which only allow certain directions of motion at any point in time.
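For the linear Kalman filter mentioned above, the prediction step takes the following standard form, where $A$ is the system matrix, $B$ the input matrix, $u$ a known input (such as a drive command) and $Q$ the covariance of the process disturbance $w$; $\hat{x}$ and $P$ denote the state estimate and its covariance. For the EKF, the state is propagated through the non-linear system equation and $A$ in the covariance update is replaced by its Jacobian; the UKF and the particle filter propagate sigma points or particles instead.

$$
\begin{aligned}
x_k &= A\,x_{k-1} + B\,u_{k-1} + w_{k-1}, \qquad w_{k-1} \sim \mathcal{N}(0, Q) \\
\hat{x}_k^- &= A\,\hat{x}_{k-1} + B\,u_{k-1} \\
P_k^- &= A\,P_{k-1}\,A^\top + Q
\end{aligned}
$$

The covariance grows by $Q$ at every prediction, which expresses that the model alone becomes less certain the further it extrapolates.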

In the filtering step, the predicted system condition is combined with a given measurement, and the condition estimate is updated accordingly. Analogous to the system equation, a measurement equation describes the relationship between the system condition and the measurement in the form of a formula.
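In the linear case, with measurement equation $z_k = H\,x_k + v_k$ and measurement noise $v_k \sim \mathcal{N}(0, R)$, the standard filtering step combines the predicted state $\hat{x}_k^-$ and its covariance $P_k^-$ with the measurement $z_k$ as follows, where $S_k$ is the innovation covariance and $K_k$ the Kalman gain:

$$
\begin{aligned}
S_k &= H\,P_k^-\,H^\top + R \\
K_k &= P_k^-\,H^\top S_k^{-1} \\
\hat{x}_k &= \hat{x}_k^- + K_k\,\bigl(z_k - H\,\hat{x}_k^-\bigr) \\
P_k &= \bigl(I - K_k H\bigr)\,P_k^-
\end{aligned}
$$

The gain weights the innovation $z_k - H\,\hat{x}_k^-$ by the relative confidence in prediction and measurement: a large $R$ shrinks $K_k$, and the filter trusts its prediction more.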

Combining the data-driven and model-based approaches is both conceivable and advantageous for certain applications. The parameters of the underlying models for the model-based approaches can, for example, be determined with data-driven approaches or dynamically adapted to the respective environmental conditions. In addition, the system condition from the model-based approach can be part of a feature vector for the data-driven approaches. However, all of this strongly depends on the application.
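As one concrete example of letting data adapt a model parameter, the measurement-noise variance $R$ of a scalar Kalman filter can be estimated from the filter's own innovations by covariance matching: with $H = 1$, the innovation $\nu$ has variance $P^- + R$, so $R$ can be estimated as the average of $\nu^2 - P^-$ over a sliding window. The sketch below is a minimal, hypothetical implementation of that idea; the window length and lower bound are assumed values.

```python
from collections import deque


class InnovationNoiseEstimator:
    """Estimate a scalar measurement-noise variance R from filter innovations (H = 1)."""

    def __init__(self, window: int = 100, r_min: float = 1e-6):
        self.buf = deque(maxlen=window)   # only the last `window` terms are kept
        self.r_min = r_min                # keeps R positive despite estimation noise

    def update(self, innovation: float, p_pred: float) -> float:
        """Add one innovation and the predicted variance P^-; return the current R estimate."""
        self.buf.append(innovation ** 2 - p_pred)
        return max(self.r_min, sum(self.buf) / len(self.buf))
```

The returned value would replace `R` in the next filtering step, letting the filter react, for instance, to a sensor that becomes noisier as the environment changes.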

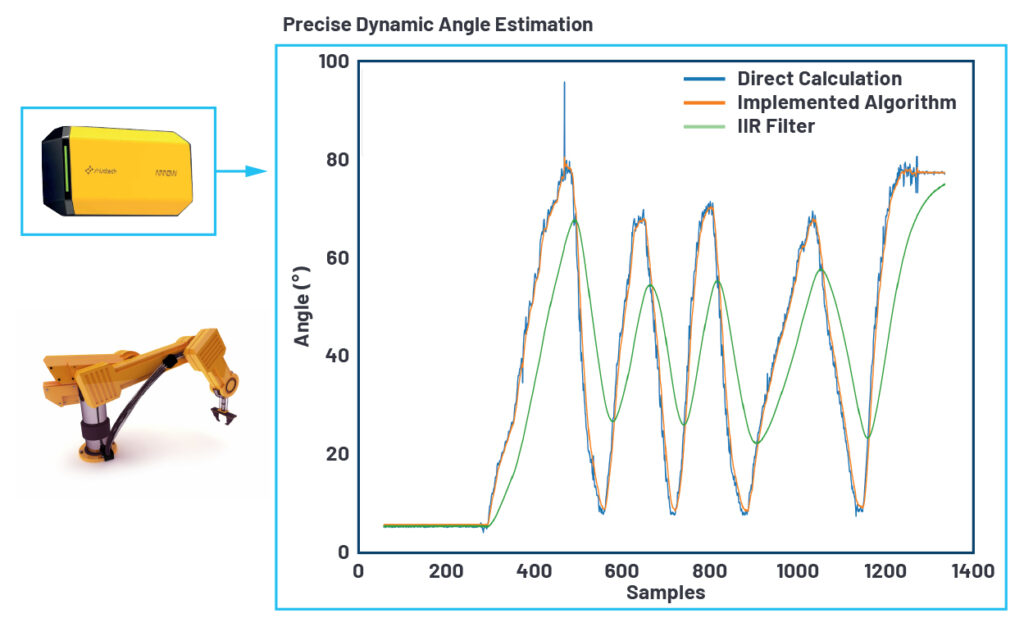

The algorithm pipeline described above was implemented on the iCOMOX and evaluated for precise dynamic pose estimation of an industrial robot’s end effector. Accelerometer and gyroscope data, each sampled at 200 Hz, were used as input. The iCOMOX was attached to the robot’s end effector and its pose, consisting of position and orientation, was determined; see Figure 5. As the figure shows, direct calculation reacts very quickly, but is also very noisy, with many outliers. An IIR filter (commonly used in practice) yields a very smooth signal but follows the true pose very poorly. In contrast, the algorithms presented here yield a very smooth signal in which the estimated pose follows the motion of the robot’s end effector precisely and dynamically.
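The article does not disclose the filter implemented on the iCOMOX. As a textbook illustration of the model-based pipeline applied to the same sensor pair, the sketch below estimates a single tilt angle with a two-state Kalman filter: the gyroscope rate drives the prediction step, the tilt angle computed from the accelerometer’s gravity components drives the filtering step, and a gyroscope bias is estimated alongside. The class name, axis convention and noise parameters are assumptions; a time step of 5 ms corresponds to the 200 Hz sampling rate used in the evaluation.

```python
import math


class TiltKalman:
    """Two-state Kalman filter: tilt angle (rad) and gyroscope bias (rad/s).

    Noise parameters are assumed values, not tuned for any specific sensor.
    """

    def __init__(self, q_angle: float = 1e-4, q_bias: float = 1e-6, r_meas: float = 3e-2):
        self.angle, self.bias = 0.0, 0.0
        self.P = [[1.0, 0.0], [0.0, 1.0]]        # initial state covariance
        self.q_angle, self.q_bias, self.r = q_angle, q_bias, r_meas

    def step(self, gyro_rate: float, accel_y: float, accel_z: float, dt: float) -> float:
        # Prediction: integrate the bias-corrected rate; A = [[1, -dt], [0, 1]].
        self.angle += dt * (gyro_rate - self.bias)
        p00, p01 = self.P[0]
        p10, p11 = self.P[1]
        p00 += dt * (dt * p11 - p01 - p10) + self.q_angle   # (A P A^T + Q)[0][0]
        p01 -= dt * p11
        p10 -= dt * p11
        p11 += self.q_bias
        # Filtering: the accelerometer tilt angle is the measurement; H = [1, 0].
        z = math.atan2(accel_y, accel_z)   # assumed axis convention (tilt about x)
        s = p00 + self.r                   # innovation variance
        k0, k1 = p00 / s, p10 / s          # Kalman gain
        nu = z - self.angle                # innovation
        self.angle += k0 * nu
        self.bias += k1 * nu
        # Covariance update P = (I - K H) P.
        self.P = [[p00 - k0 * p00, p01 - k0 * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
        return self.angle
```

Unlike a fixed IIR low-pass filter, the gain here follows from the ratio of process and measurement noise, so fast motion measured by the gyroscope passes through while the accelerometer’s noise and the gyroscope’s drift are suppressed. A full position-and-orientation pose would need a larger state vector and, because rotations are non-linear, typically an EKF or UKF.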

Figure 5: Precise dynamic angle estimation on an embedded platform. The implemented algorithm showed much better performance when compared to the direct calculation and IIR filtering

Self-reliance

Ideally, through appropriate local data analysis, the AI algorithms should also be able to decide for themselves which sensors and algorithms are relevant for the application; this amounts to smart scalability of the platform. At present, it is still the engineer who must find the best algorithm for each application, even though the AI algorithms can already be scaled with minimal effort to various machine-condition and structural-health monitoring applications.

The embedded AI should also assess the quality of the data and, if it is inadequate, find and apply the optimal settings for the sensors and the entire signal-processing chain. If several different sensor modalities are used for fusion, an AI algorithm can compensate for the weaknesses and disadvantages of individual sensors and methods. This increases data quality and system reliability. If the AI algorithm classifies a sensor as irrelevant or only marginally relevant to the application, its data flow can be throttled.
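Two of these ideas can be sketched in a few lines. Inverse-variance weighting is a standard way to fuse independent estimates of the same quantity so that a noisier sensor contributes less; the throttling rule is purely hypothetical and assumes that a relevance score in [0, 1] is already available from the AI algorithm. Function names, threshold and minimum rate fraction are assumptions.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent estimates [(value, variance), ...] of the same quantity.

    Each estimate is weighted by 1/variance; returns the fused value and its variance.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total   # fused variance is never larger than the best input's


def throttled_rate(base_rate_hz: float, relevance: float,
                   min_fraction: float = 0.1, threshold: float = 0.2) -> float:
    """Hypothetical rule: cut a sensor's output rate when its relevance score is low."""
    return base_rate_hz * (min_fraction if relevance < threshold else 1.0)
```

Keeping a small residual rate instead of switching a low-relevance sensor off entirely lets the system notice if that sensor becomes relevant again.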

The open embedded platform iCOMOX comes with a free software development kit and example hardware and software projects that accelerate prototyping, facilitate development and help realise ideas. Using multi-sensor data fusion and embedded AI, a robust and reliable wireless mesh network of smart sensors for condition-based monitoring can be created. With it, big data is turned into smart data locally.