By Noah Madinger, Colorado Electronic Product Design (CEPD)

Developing electronic designs is loaded with challenges – from system performance expectations to schedule and budgetary limits. A design approach that can help juggle these successfully begins with the hardware.

A hardware architecture that combines a microcontroller (MCU) with a field programmable gate array (FPGA) is known as a “co-processor architecture”. By drawing on the strengths of both processor technologies, it lets the embedded designer meet demanding requirements while keeping the flexibility to address other challenges. The co-processor architecture blends the development speed and performance of an MCU with the flexibility of an FPGA. Because further optimisations and performance enhancements are available at every development step, it can meet even the most challenging requirements, for both today’s designs and future ones.

Strengths

One common application for FPGA designs is to interface directly with a high-speed analogue-to-digital converter (ADC). The signal is digitised, read into the FPGA and processed with DSP algorithms, after which the FPGA decides what to do with the result.

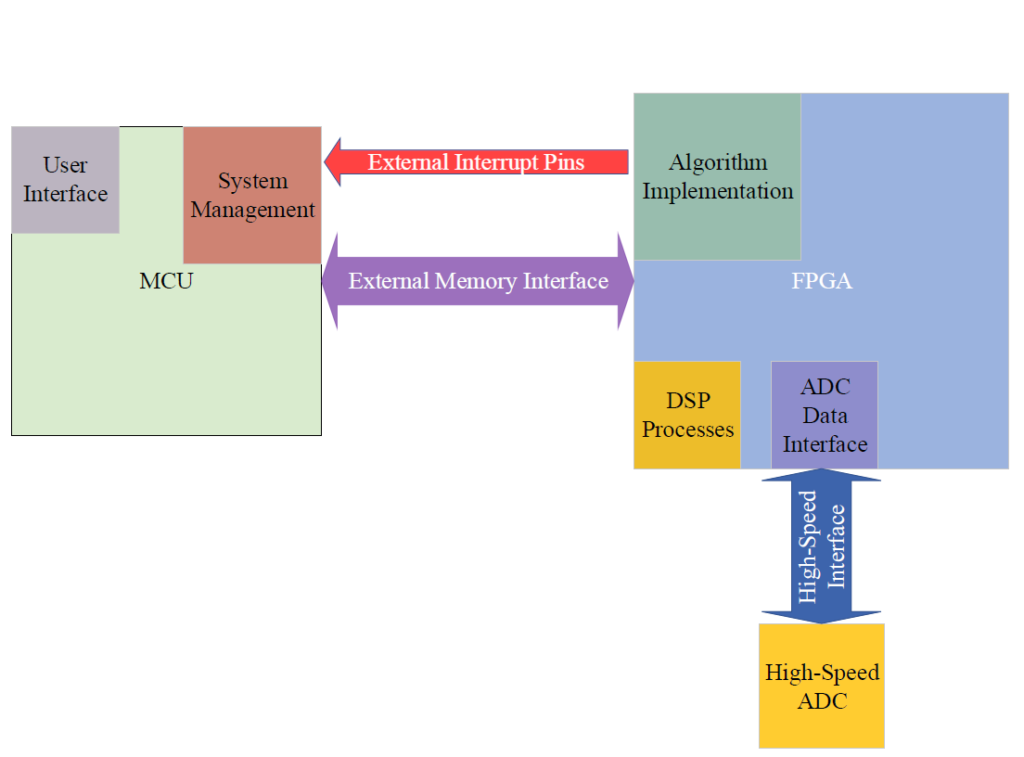

Figure 1 shows a generic co-processor architecture, in which the MCU connects to the FPGA through its external memory interface; i.e., the FPGA is treated as if it were a piece of external static random-access memory (SRAM). The FPGA also drives signals back to the MCU that serve as hardware interrupt lines and status indicators. These let the FPGA flag critical states to the MCU, such as a completed ADC conversion or a fault.

Figure 1: Generic co-processor diagram
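The following minimal C sketch shows how MCU firmware might see an FPGA mapped onto its external memory bus. The register layout, bit assignments and function name are hypothetical and are not taken from any particular design. On real hardware, the structure would be overlaid on the address range that the MCU's external memory controller assigns to the FPGA. The read would typically be triggered by the FPGA's data-ready interrupt line rather than by polling.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical register map of the FPGA as seen on the MCU's external bus.
 * On a real board: #define FPGA ((fpga_regs_t *)FPGA_BASE_ADDR), where the
 * base address depends on the memory controller configuration. */
typedef struct {
    volatile uint32_t status;       /* bit 0: conversion ready, bit 1: fault */
    volatile uint32_t control;      /* bit 0: acknowledge ready flag */
    volatile uint32_t count;        /* number of valid samples in buffer */
    volatile int16_t  samples[64];  /* ADC sample buffer */
} fpga_regs_t;

#define FPGA_STATUS_READY (1u << 0)
#define FPGA_STATUS_FAULT (1u << 1)
#define FPGA_CTRL_ACK     (1u << 0)
#define FPGA_BUF_LEN      64u

/* Copy the latest ADC block out of the FPGA.
 * Returns the number of samples copied, 0 if no data is ready,
 * or -1 if the FPGA reports a fault. */
int fpga_read_block(fpga_regs_t *fpga, int16_t *dst, size_t max)
{
    uint32_t status = fpga->status;          /* one volatile read */
    if (status & FPGA_STATUS_FAULT)
        return -1;
    if (!(status & FPGA_STATUS_READY))
        return 0;

    size_t n = fpga->count;
    if (n > FPGA_BUF_LEN) n = FPGA_BUF_LEN;  /* never trust an out-of-range count */
    if (n > max) n = max;
    for (size_t i = 0; i < n; i++)
        dst[i] = fpga->samples[i];           /* each access is an external-bus read */

    fpga->control |= FPGA_CTRL_ACK;          /* tell the FPGA the block was consumed */
    return (int)n;
}
```

The register block is passed as a pointer rather than hard-coded as an address. This lets the same routine be exercised against an ordinary struct in a host-side test.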

Figure 2: DSP and MCU architecture

DSP and MCU

In the first development stage, the MCU sits at the centre of the DSP and MCU architecture; see Figure 2. All things being equal, developing executable software for an MCU takes less time and fewer resources than developing FPGA logic in a hardware description language (HDL). Thus, by starting product development with the MCU as the primary processor, algorithms can quickly be implemented, tested and validated. This also allows algorithmic and logical bugs to be discovered early in the design process, with the opportunity to test and validate substantial portions of the signal chain.

In this setup, the FPGA serves as a high-speed data-gathering interface. Its task is to reliably pipe data from the high-speed ADC, alert the MCU that new data is available and present that data on the MCU’s external memory interface. Although this role does not include HDL-based DSP processes or other algorithms, it is nonetheless critical. The FPGA development performed in this phase lays the foundation for the product’s success, both during development and after market release. By focusing on just the low-level interface, adequate time can be dedicated to testing these essential operations.

In this scenario, the full signal path (amplification, attenuation, conversion, etc.) is tested and validated. Development time and effort are reduced by initially implementing the algorithms in software (C/C++). The lessons learned from this implementation can be transferred directly to HDL with software-to-HDL tools, such as Xilinx’s Vivado HLS.
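One way to keep the later C-to-HDL step smooth is to write the MCU-side algorithm in a restricted style from the start. The sketch below is a hypothetical 8-tap moving-average filter, not an algorithm from this article. It uses fixed loop bounds, static storage and integer arithmetic, which suit both an MCU build and HLS tools. An HLS flow would still typically need a top-level interface definition and optimisation directives on top of this.

```c
#include <stdint.h>

#define TAPS 8   /* hypothetical filter length */

/* 8-tap moving-average FIR in an HLS-friendly style: fixed loop bounds,
 * static storage, no malloc, no recursion, integer arithmetic only. */
int16_t fir_moving_average(int16_t sample)
{
    static int16_t delay[TAPS];   /* delay line, zero-initialised */
    int32_t acc = 0;

    /* Shift the delay line and accumulate the previous 7 samples */
    for (int i = TAPS - 1; i > 0; i--) {
        delay[i] = delay[i - 1];
        acc += delay[i];
    }
    delay[0] = sample;
    acc += sample;

    return (int16_t)(acc / TAPS); /* mean of the last TAPS samples */
}
```

The same source file can be compiled for the MCU and validated against captured ADC data. It can then be handed to the HLS tool largely unchanged.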

Second development stage

Figure 3: The architecture of system management with a microcontroller

The co-processor approach offers a second development stage, defined by moving the DSP processes and algorithm implementations from the MCU onto the FPGA. The FPGA remains responsible for the high-speed ADC interface. By also taking on these roles, it can make full use of its speed and parallelism. Unlike the MCU, it can implement multiple instances of DSP processes and algorithm channels and run them simultaneously.

Tools such as Vivado HLS can provide the functional translation from executable C/C++ code to synthesisable HDL. Timing constraints, process parameters and other user preferences must still be defined and implemented. However, the core functionality is preserved and translated to the FPGA fabric.

Here, the MCU acts as a system manager, monitoring, updating and reporting the FPGA’s status and control registers. The MCU also manages the user interface (UI), which could be anything from a web server accessed over Ethernet or Wi-Fi to an industrial touchscreen interface. In this setup, the MCU is relieved of computationally intensive processing tasks, and each device plays to its strengths. The FPGA provides fast, parallel execution of DSP processes and algorithm implementations, whereas the MCU provides a responsive, streamlined UI and manages the product’s processes.
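The following minimal sketch illustrates the system-manager role. It assumes a hypothetical register pair: each bit of a control register enables one of several parallel DSP channels in the FPGA, and each bit of a status register flags an overflow on that channel. The layout and names are illustrative only. On a real system, the read-modify-write would need protecting (for example, by briefly masking interrupts) if an interrupt handler could touch the same register.

```c
#include <stdint.h>

#define NUM_CHANNELS 4u   /* hypothetical number of parallel DSP channels */

/* Hypothetical control/status registers exposed by the FPGA */
typedef struct {
    volatile uint32_t control;   /* bit n: enable DSP channel n */
    volatile uint32_t status;    /* bit n: channel n overflowed */
} dsp_regs_t;

/* Read-modify-write so other channels' enable bits are preserved */
void dsp_set_channel(dsp_regs_t *regs, unsigned ch, int enable)
{
    if (ch >= NUM_CHANNELS)
        return;                  /* ignore requests for non-existent channels */
    uint32_t ctrl = regs->control;
    ctrl = enable ? (ctrl | (1u << ch)) : (ctrl & ~(1u << ch));
    regs->control = ctrl;
}

/* Count channels currently flagging an overflow, e.g. for a UI status page */
unsigned dsp_overflow_count(const dsp_regs_t *regs)
{
    uint32_t s = regs->status & ((1u << NUM_CHANNELS) - 1u);
    unsigned n = 0;
    while (s) {
        n += s & 1u;
        s >>= 1;
    }
    return n;
}
```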

Product release

Both the MCU and FPGA are field-updateable devices. Several advancements have been made to make FPGA updates just as accessible as software updates. Moreover, since the FPGA is within the MCU’s addressable memory space, the MCU can serve as the access point for the entire system, receiving updates for both processors, which can be conditionally scheduled, distributed or customised by the user.
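Before the MCU forwards an update to either processor, it would normally check that the image is intact and newer than the installed version. The sketch below assumes a hypothetical update header and uses the standard CRC-32 (IEEE 802.3 polynomial, reflected form 0xEDB88320) as the integrity check. Real products may use a different checksum or cryptographic signatures. The device-specific step that actually programs the FPGA configuration memory is omitted.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical header sent in front of an update image */
typedef enum { TARGET_MCU = 0, TARGET_FPGA = 1 } update_target_t;

typedef struct {
    update_target_t target;   /* which processor the payload is for */
    uint32_t version;         /* monotonically increasing build number */
    uint32_t length;          /* payload size in bytes */
    uint32_t crc32;           /* CRC-32 of the payload */
} update_header_t;

/* Bitwise CRC-32 (IEEE 802.3, reflected polynomial 0xEDB88320) */
uint32_t crc32_ieee(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int b = 0; b < 8; b++)
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
    }
    return ~crc;
}

/* Accept an update only if it is newer than what is installed and intact */
int update_is_acceptable(const update_header_t *hdr, const uint8_t *payload,
                         uint32_t installed_version)
{
    if (hdr->version <= installed_version)
        return 0;
    return crc32_ieee(payload, hdr->length) == hdr->crc32;
}
```

Because the MCU is the single access point, the same check can gate updates for both processors before any of them are scheduled or applied.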

Finally, because user and use-case logs record which builds are deployed and how they are used, system performance can be refined and enhanced even after the product is in the field. This total-system updateability is most valuable in space-based applications, where system maintenance and updates are performed remotely. These range from changing logical conditions to complex updates of the communications modulation schemes. The programmability offered by the FPGA and the co-processor architecture can accommodate this entire range of capabilities.

This hardware setup can also continue to deliver cost reductions. For example, field deployments may reveal that the product can operate just as well with a cheaper MCU or a lower-performance FPGA. Furthermore, should a component become unavailable or obsolete, the architecture allows new components to be integrated into the design. This is not possible with some architectures, such as a single-chip system-on-chip (SoC), or a high-performance DSP or MCU that handles all of the product’s processing. The co-processor approach offers a good mix of capability and flexibility, giving the designer more choices and freedom during development and after market release.

Satellite communications example

Simply put, the value of a co-processor is to offload the primary processing unit so that tasks are executed in hardware, where they can be accelerated and streamlined. The advantage is an increase in computational speed and performance together with a reduction in development time and cost. These are compelling arguments for space communications systems.

In their paper “FPGA-based hardware as coprocessor”, G. Prasad and N. Vasantha explain how data processing within an FPGA addresses the computational needs of satellite communications systems. It avoids the high non-recurring engineering (NRE) costs of application-specific integrated circuits (ASICs) and the application-specific limitations of a hard-architecture processor.

As in the approach described earlier, their design begins with the application processor performing most of the computationally intensive algorithms. From this starting point, they identify the sections of software that consume most of the CPU’s clock cycles and migrate these to an HDL implementation. Their diagram is very similar to those presented so far. However, they represent the application program as an independent block that can be realised either in the host (processor) or in the FPGA-based hardware.

Figure 4: Application program, host processor and FPGA-based hardware used in a satellite communications setup

Peripheral performance is dramatically increased by using a peripheral component interconnect (PCI) interface and the host processor’s direct memory access (DMA). The benefit was most obvious in the de-randomisation process, originally performed in the host processor’s software. This created a bottleneck in the system’s real-time response, but when the process was moved to the FPGA, the situation improved:

- De-randomisation was executed in real time without bottlenecks.

- The host processor’s computational overhead was significantly reduced, so it could better perform its intended logging role.

- Overall system performance was much improved.
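The summary above does not say which randomisation scheme the paper used. As an illustration only, the sketch below assumes the pseudo-randomiser from the CCSDS telemetry standards (polynomial x^8 + x^7 + x^5 + x^3 + 1, seeded with all ones). It shows why the operation maps so well to hardware: it is just a shift register and one XOR per bit. An FPGA can do this at line rate, whereas a processor must loop over every bit.

```c
#include <stddef.h>
#include <stdint.h>

/* XOR a frame with the sequence generated by h(x) = x^8 + x^7 + x^5 + x^3 + 1,
 * seeded with all ones (assumed CCSDS-style randomiser). XOR is its own
 * inverse, so the same routine both randomises and de-randomises. */
void ccsds_derandomise(uint8_t *buf, size_t len)
{
    uint8_t sr = 0xFF;                        /* bit i holds sequence bit a[n+i] */
    for (size_t i = 0; i < len; i++) {
        uint8_t pn = 0;
        for (int b = 0; b < 8; b++) {
            uint8_t out = sr & 1u;            /* a[n] */
            /* a[n+8] = a[n+7] ^ a[n+5] ^ a[n+3] ^ a[n] */
            uint8_t fb = ((sr >> 7) ^ (sr >> 5) ^ (sr >> 3) ^ sr) & 1u;
            sr = (uint8_t)((sr >> 1) | (fb << 7));
            pn = (uint8_t)((pn << 1) | out);  /* pack bits MSB first */
        }
        buf[i] ^= pn;
    }
}
```

The sequence restarts on every call, so each call handles one frame. The first output bytes are 0xFF, 0x48 and 0x0E.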

Automotive infotainment example

Sophisticated in-car infotainment systems are a customer-required feature. These systems are highly visible and are expected to provide rich functionality and exceptional performance. Meeting these expectations requires signal processing and wireless communications.

Delphi Delco Electronics Systems’ automotive entertainment architecture uses a Renesas SH-4 MCU with a companion ASIC, Hitachi’s HD64404 Amanda. The architecture satisfies over 75% of the automotive market’s baseline entertainment functionality, but it does not process video or offer wireless communication. These gaps were addressed by adding an FPGA to the existing architecture and design.

Figure 5: Infotainment FPGA co-processor architecture, example 1

The architecture shown in Figure 5 is suitable for both video processing and wireless-communication management. By pushing the DSP functions onto the FPGA, the Amanda processor can act as a system manager and does not have to implement a wireless communications stack. Since both the Amanda and the FPGA have access to external memory, data can rapidly be exchanged between the processors and components.
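Exchanging data through shared external memory usually relies on a simple convention that both sides agree on. A common choice, assumed here rather than taken from the Delphi design, is a single-producer, single-consumer ring buffer. The producer only writes `head` and the consumer only writes `tail`, so no lock is needed. The names and sizes are hypothetical. On real multi-processor hardware, `volatile` alone does not guarantee ordering, so memory barriers (or the bus's own ordering rules) would also be needed.

```c
#include <stdint.h>

#define RING_SIZE 16u   /* hypothetical; must be a power of two */

/* Ring buffer placed in memory that both processors can access */
typedef struct {
    volatile uint32_t head;             /* next slot to write (producer only) */
    volatile uint32_t tail;             /* next slot to read (consumer only) */
    volatile uint32_t data[RING_SIZE];
} ring_t;

/* Producer side: returns 0 if the buffer is full */
int ring_push(ring_t *r, uint32_t value)
{
    uint32_t head = r->head;
    if (head - r->tail == RING_SIZE)
        return 0;
    r->data[head & (RING_SIZE - 1u)] = value;
    r->head = head + 1u;                /* publish only after the data is written */
    return 1;
}

/* Consumer side: returns 0 if the buffer is empty */
int ring_pop(ring_t *r, uint32_t *value)
{
    uint32_t tail = r->tail;
    if (r->head == tail)
        return 0;
    *value = r->data[tail & (RING_SIZE - 1u)];
    r->tail = tail + 1u;                /* free the slot after reading it */
    return 1;
}
```

The indices are free-running 32-bit counters, so `head - tail` gives the fill level correctly even after they wrap around.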

Figure 6: Infotainment FPGA co-processor architecture, example 2

The second infotainment setup, shown in Figure 6, highlights the FPGA’s ability to handle both the incoming high-speed analogue data and the compression and encoding needed for video applications. In fact, all of this functionality can be pushed onto the FPGA and handled in real time through parallel processing.

By including an FPGA within an existing hardware architecture, the proven performance of the existing hardware can be coupled with flexibility and future-proofing. Even within existing systems, the co-processor architecture gives designers options that would otherwise not be available.

The advantage of rapid prototyping

Rapid prototyping allows tasks to be executed in parallel and quickly identifies bugs and design problems. It also validates data and signal paths, especially those within a project’s critical path. However, for this process to produce streamlined, efficient results, each task should be handled by someone with sufficient, relevant expertise, such as a hardware, embedded software, DSP or HDL engineer. Although there are now plenty of interdisciplinary professionals who can tackle one or more of these tasks, coordinating all of those efforts still adds substantial project overhead.

The authors of the paper “An FPGA-based rapid prototyping platform for wavelet co-processors” state that the co-processor architecture allows a single DSP engineer to fulfil all these roles efficiently and effectively. For the study, the team designed and simulated a DSP function in MATLAB Simulink to (1) verify its performance through simulation and (2) provide a reference for future designs.

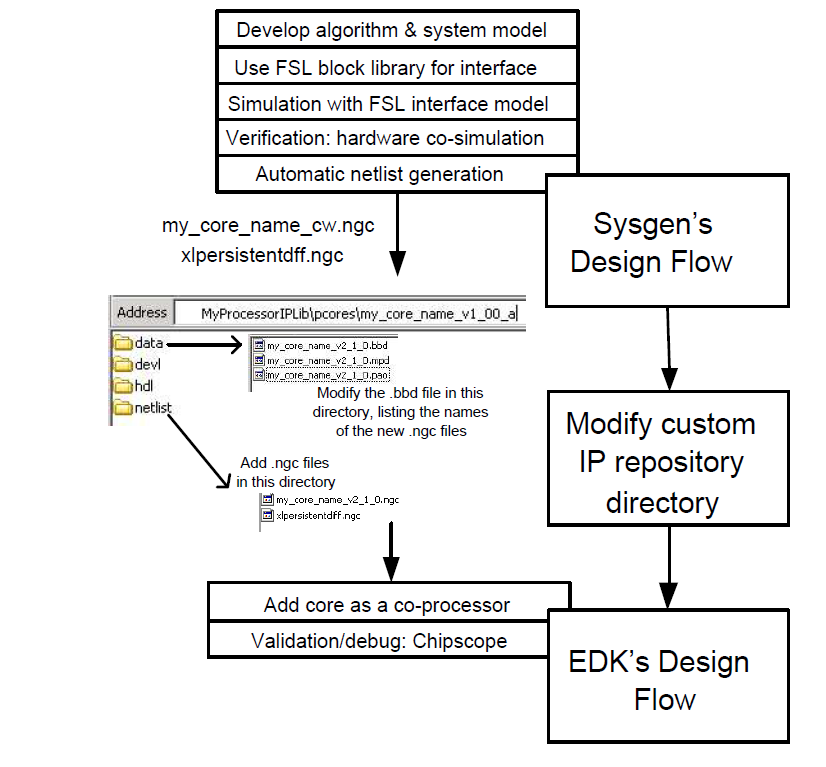

After simulation, critical functions were identified and divided into different cores. These are soft-core components and processors that can be synthesised within an FPGA. The most important step was to define the interfaces between these cores and components, and to compare the data-exchange performance against the desired, simulated performance. This design process closely aligns with Xilinx’s design flow for embedded systems; see Figure 7.

Figure 7: Design flow implementation

By dividing the system into synthesisable cores, the DSP engineer can focus on the most critical aspects of the signal processing chain, without needing hardware or HDL expertise to modify, route or implement different soft-core processors or components within the FPGA. With an awareness of the data formats and interfaces, the engineer has full control over the signal paths and the system-performance refinement.
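Agreeing on a data format at each core boundary is what lets the DSP engineer rearrange cores without touching their internals. The paper does not specify such a convention, so the following is a hypothetical example. Samples could cross every interface as signed Q15 fixed-point values (1 sign bit, 15 fraction bits). The helpers below convert to and from floating point, as a floating-point reference model might when checking a core's output.

```c
#include <stdint.h>

/* Hypothetical interface convention: signed Q15, value = round(x * 32768),
 * valid for x in [-1, 1); out-of-range inputs saturate. */
int16_t float_to_q15(float x)
{
    if (x >= 32767.0f / 32768.0f) return INT16_MAX;   /* saturate high */
    if (x <= -1.0f) return INT16_MIN;                 /* saturate low */
    float scaled = x * 32768.0f;
    /* round to nearest, halves away from zero */
    return (int16_t)(scaled >= 0.0f ? scaled + 0.5f : scaled - 0.5f);
}

float q15_to_float(int16_t q)
{
    return (float)q / 32768.0f;
}
```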

Discrete cosine transform case study

Empirical findings not only confirm the flexibility of the co-processor architecture, but also highlight the performance-enhancing options attained through modern FPGA tools.

In this example, a discrete cosine transform (DCT) was selected as the computationally intensive algorithm, since it is used in digital signal processing for pattern recognition and filtering. The findings centre on the progression of the algorithm from a C-based implementation to an HDL-based implementation using the Vivado HLS v2019 tools.

Beginning with the C-based implementation, the DCT algorithm accepts two arrays of 16-bit numbers: array “a” is the input array to the DCT, and array “b” is the output array from the DCT. The data width (DW) is therefore defined as 16, and the number of elements within the arrays (N) is 1024/DW, or 64. The size of the DCT matrix (DCT_SIZE) is set to 8, which defines it as an 8 x 8 matrix.
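The study's C source is not reproduced here. The sketch below is our own minimal 2-D DCT using the sizes described above (DW = 16, N = 64, DCT_SIZE = 8). It assumes an orthonormal DCT-II, a row-major 8 x 8 input block and Q13 fixed-point coefficients. The function names, scaling and rounding choices are assumptions, not the code used in the study. It applies an 8-point 1-D DCT to every row and then to every column.

```c
#include <stdint.h>

#define DW 16          /* data width in bits */
#define N (1024 / DW)  /* 64 elements: one 8 x 8 block */
#define DCT_SIZE 8     /* 8-point DCT along rows and columns */
#define FRAC_BITS 13   /* Q13 fixed-point coefficients */

/* 4096 * cos(m * pi / 16) for m = 0..8, i.e. 0.5 * cos(m * pi / 16) in Q13 */
static const int32_t COS_Q13[9] = {4096, 4017, 3784, 3406, 2896, 2276, 1567, 799, 0};

/* Orthonormal DCT-II coefficient c(k) * cos((2n + 1) * k * pi / 16) in Q13 */
static int32_t coeff(int k, int n)
{
    if (k == 0)
        return COS_Q13[4];              /* sqrt(1/8) = 0.5 * cos(pi/4) */
    int m = ((2 * n + 1) * k) % 32;     /* angle in units of pi/16 */
    if (m > 16)
        m = 32 - m;                     /* cos(2pi - x) = cos(x) */
    return (m > 8) ? -COS_Q13[16 - m]   /* cos(pi - x) = -cos(x) */
                   : COS_Q13[m];
}

/* Divide by 2^shift, rounding to nearest (halves away from zero) */
static int64_t descale(int64_t x, int shift)
{
    int64_t half = (int64_t)1 << (shift - 1);
    return (x >= 0) ? (x + half) >> shift : -((-x + half) >> shift);
}

static int16_t saturate16(int64_t x)
{
    if (x > INT16_MAX) return INT16_MAX;
    if (x < INT16_MIN) return INT16_MIN;
    return (int16_t)x;
}

/* 2-D DCT of one row-major 8 x 8 block: a = input, b = output */
void dct(const int16_t a[N], int16_t b[N])
{
    int64_t tmp[DCT_SIZE][DCT_SIZE];    /* row results, still scaled by 2^13 */

    for (int r = 0; r < DCT_SIZE; r++)  /* 1-D DCT of each row */
        for (int k = 0; k < DCT_SIZE; k++) {
            int64_t acc = 0;
            for (int n = 0; n < DCT_SIZE; n++)
                acc += (int64_t)a[r * DCT_SIZE + n] * coeff(k, n);
            tmp[r][k] = acc;
        }

    for (int c = 0; c < DCT_SIZE; c++)  /* 1-D DCT of each column */
        for (int k = 0; k < DCT_SIZE; k++) {
            int64_t acc = 0;
            for (int n = 0; n < DCT_SIZE; n++)
                acc += tmp[n][c] * coeff(k, n);
            /* remove both Q13 scale factors in one rounding step */
            b[k * DCT_SIZE + c] = saturate16(descale(acc, 2 * FRAC_BITS));
        }
}
```

For a constant block in which every input is 10, only the DC term `b[0]` is non-zero, and it equals 8 x 10 = 80. Keeping the row results at full precision until the end avoids accumulating rounding error between the two passes.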

Although execution time is an important consideration, the validation process weights functionality more heavily at this stage. This is acceptable because the algorithm will ultimately be implemented in an FPGA, where hardware acceleration, loop unrolling and other techniques are readily available.

Figure 8: Xilinx Vivado HLS design flow

Once the DCT code was created within the Vivado HLS tool, the next step was to synthesise the design for FPGA implementation. At this step, some of the most significant benefits of moving the algorithm’s execution from an MCU to an FPGA become apparent. For reference, this step is equivalent to the ‘system management with a microcontroller’ setup described earlier. Modern FPGA tools offer a suite of optimisations that greatly improve the performance of complex algorithms.

Before analysing the results, there are some important terms to bear in mind:

- Latency – the number of clock cycles required to execute all iterations of the loop;

- Interval – the number of clock cycles before the next iteration of a loop starts to process data;

- Block random access memory (BRAM) – dedicated on-chip memory blocks;

- DSP48E – digital signal processing slice for the UltraScale architecture;

- Flip-flop (FF) – a single-bit storage element;

- Look-up table (LUT) – the configurable logic element that implements combinational functions;

- UltraRAM (URAM) – large on-chip memory blocks available in UltraScale+ devices.

Default

The default optimisation setting comes from the unaltered result of translating the C-based algorithm to synthesisable HDL. No optimisations are enabled, and this can be used as a performance reference to better understand the other optimisations.

Pipeline Inner Loop

The PIPELINE directive, applied here to the inner loops, instructs Vivado HLS to pipeline them so that a new iteration can start processing data while earlier iterations are still in the pipeline. Thus, new data does not have to wait for the existing data to complete before processing can begin.
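The following hypothetical kernel, a matrix-vector product at the core of one 1-D DCT pass, shows roughly where this directive goes. It places `#pragma HLS PIPELINE II=1` in the inner loop, where `II=1` requests an initiation interval of one clock cycle. The kernel and its names are ours, not the study's. A standard C compiler ignores the pragma, so the same source still runs in a C testbench.

```c
#include <stdint.h>

#define DCT_SIZE 8

/* Hypothetical kernel: y = coef * x, with coef stored row-major and flattened */
void mat_vec(const int16_t coef[DCT_SIZE * DCT_SIZE],
             const int16_t x[DCT_SIZE], int32_t y[DCT_SIZE])
{
    for (int k = 0; k < DCT_SIZE; k++) {          /* outer loop: one output each */
        int32_t acc = 0;
        for (int n = 0; n < DCT_SIZE; n++) {      /* inner loop: pipelined */
#pragma HLS PIPELINE II=1
            acc += (int32_t)coef[k * DCT_SIZE + n] * x[n];  /* one MAC per cycle once full */
        }
        y[k] = acc;
    }
}
```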

Pipeline Outer Loop

Applying the PIPELINE directive to the outer loop pipelines that loop and, as a result, fully unrolls the inner loops, so their operations now occur concurrently. Applying the directive to the outer loop cuts both the latency and the interval in half.
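A minimal sketch of the outer-loop variant on a hypothetical matrix-vector kernel follows; the names are ours, not the study's. With the pragma in the outer loop body, Vivado HLS would unroll the eight-iteration inner loop so that all multiply-accumulates for one output can be scheduled together. Whether `II=1` is actually reached depends on how many array elements can be read per cycle.

```c
#include <stdint.h>

#define DCT_SIZE 8

/* Hypothetical kernel: y = coef * x, outer loop pipelined */
void mat_vec_outer(const int16_t coef[DCT_SIZE * DCT_SIZE],
                   const int16_t x[DCT_SIZE], int32_t y[DCT_SIZE])
{
    for (int k = 0; k < DCT_SIZE; k++) {
#pragma HLS PIPELINE II=1
        int32_t acc = 0;
        for (int n = 0; n < DCT_SIZE; n++)        /* unrolled by the tool */
            acc += (int32_t)coef[k * DCT_SIZE + n] * x[n];
        y[k] = acc;
    }
}
```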

Array Partition

The ARRAY_PARTITION directive splits arrays into smaller arrays or individual elements. This gives the hardware more memory ports, so multiple elements can be accessed in the same clock cycle. More memory and register resources are consumed, but again, the execution time of this algorithm is halved.
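The sketch below applies the directive to a hypothetical matrix-vector kernel whose outer loop is pipelined. The coefficient table is stored flattened in row-major order, so a cyclic partition with factor 8 places each of the eight coefficients used by one output in a different memory bank. The input vector `x` is split completely into registers. The partition choices and names are ours, not the study's, and a normal C compiler ignores the pragmas.

```c
#include <stdint.h>

#define DCT_SIZE 8

/* Hypothetical kernel: y = coef * x with partitioned arrays, so one full
 * row of coef and all of x can be read in a single clock cycle. */
void mat_vec_partitioned(const int16_t coef[DCT_SIZE * DCT_SIZE],
                         const int16_t x[DCT_SIZE], int32_t y[DCT_SIZE])
{
#pragma HLS ARRAY_PARTITION variable=coef cyclic factor=8 dim=1
#pragma HLS ARRAY_PARTITION variable=x complete dim=1
    for (int k = 0; k < DCT_SIZE; k++) {
#pragma HLS PIPELINE II=1
        int32_t acc = 0;
        for (int n = 0; n < DCT_SIZE; n++)        /* 8 reads from 8 separate banks */
            acc += (int32_t)coef[k * DCT_SIZE + n] * x[n];
        y[k] = acc;
    }
}
```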

Dataflow

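The DATAFLOW directive enables task-level pipelining. The functions and loops in the region it is applied to can overlap their execution, so a new input can be read before the previous one has been fully processed, which reduces the number of clock cycles between input reads. Here it is applied to the top-level function, so only loops and functions exposed at this level benefit from it.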
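A hypothetical three-stage design illustrates the directive. With `#pragma HLS DATAFLOW` in the top-level function, Vivado HLS can overlap the read, processing and write stages, and the local arrays between them become channels between the stages. The stage names and the divide-by-two processing step are placeholders, not the study's DCT. A C compiler simply runs the stages in order.

```c
#include <stdint.h>

#define N 64

static void read_stage(const int16_t in[N], int16_t buf[N])
{
    for (int i = 0; i < N; i++)
        buf[i] = in[i];
}

static void process_stage(const int16_t buf[N], int16_t res[N])
{
    for (int i = 0; i < N; i++)
        res[i] = (int16_t)(buf[i] / 2);   /* stand-in for the real DSP work */
}

static void write_stage(const int16_t res[N], int16_t out[N])
{
    for (int i = 0; i < N; i++)
        out[i] = res[i];
}

/* Hypothetical top-level function: stages may overlap under DATAFLOW */
void process_top(const int16_t in[N], int16_t out[N])
{
#pragma HLS DATAFLOW
    int16_t buf[N], res[N];               /* become channels between stages */
    read_stage(in, buf);
    process_stage(buf, res);
    write_stage(res, out);
}
```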

Inline

The INLINE directive removes function boundaries by dissolving sub-functions into their caller, so their logic can be optimised together with the surrounding code. Both row and column processes can now execute concurrently. The number of required clock cycles is kept to a minimum, even if this consumes more FPGA resources.
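In the following minimal sketch of the directive, the hypothetical helper carries `#pragma HLS INLINE`. Vivado HLS would therefore merge it into its caller instead of building a separate hardware block for it. The names are ours, not the study's, and the pragma has no effect in an ordinary C build.

```c
#include <stdint.h>

#define DCT_SIZE 8

/* Hypothetical helper: one 8-element dot product, inlined into its caller */
static int32_t dot8(const int16_t a[DCT_SIZE], const int16_t b[DCT_SIZE])
{
#pragma HLS INLINE
    int32_t acc = 0;
    for (int n = 0; n < DCT_SIZE; n++)
        acc += (int32_t)a[n] * b[n];
    return acc;
}

/* With dot8 inlined, the tool sees one flat loop nest and can schedule
 * all of the row computations together. */
void weighted_rows(const int16_t m[DCT_SIZE * DCT_SIZE],
                   const int16_t w[DCT_SIZE], int32_t y[DCT_SIZE])
{
    for (int k = 0; k < DCT_SIZE; k++)
        y[k] = dot8(&m[k * DCT_SIZE], w);
}
```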