By Steve Mensor, VP Marketing, Achronix

Since the introduction of FPGAs several decades ago, each new architecture has continued to use a bitwise routing structure. While this approach has been successful, the rise of high-speed communication standards has required ever-increasing on-chip bus widths to support the new data rates. As a consequence, designers often spend much of their development time trying to achieve timing closure, and sacrifice performance in order to place and route their designs.

Traditional FPGA routing is based on many individual segments that run horizontally and vertically throughout the FPGA, with switch boxes at their intersections to connect paths. A path from any source to any destination on the FPGA can be made with these segments and switch boxes. This uniform structure gives extreme flexibility in implementing any logic function, for any data path width, within the FPGA fabric. The downside is that each segment adds delay to the signal path, and the effect is worse on long-distance paths, which reduces the overall performance of the design.

Another challenge with bitwise routing is congestion: signal paths must be re-routed around a blockage, which incurs further delay and degrades performance even more.

New architectures

New architectures are addressing these problems. One new solution involves a high-speed 2D network on chip (NoC) built on top of the traditional segmented FPGA routing structure. Internally, the NoC consists of an array of rows and columns that distribute network traffic horizontally and vertically throughout the FPGA. Where each row and column of the NoC meet, master and slave NoC Access Points (NAPs) act as either a source or a destination between the NoC and the programmable logic. This approach benefits designs by reducing FPGA lookup-table (LUT) usage and raising performance, which in turn improves designer productivity.

In traditional FPGA architectures, writing to and reading from external memory requires data to travel through a long, segmented routing path. This restriction limits bandwidth and consumes routing resources that might be needed by the application.

The NoC transmits data from an external source to the FPGA and memory significantly more easily than traditional FPGA architectures can. It augments the conventional programmable interconnect within the FPGA array, acting like a superhighway network superimposed over a city street system. Whilst the FPGA's conventional programmable-interconnect matrix continues to work well for slower, local data traffic, the NoC handles the more challenging, high-speed data flows.

In modern FPGAs, designers who need to connect high-speed interfaces to read from or write to external memory must consider the delays created by connecting through logic and routing within the FPGA, as well as the placement of the input and output signals. Building a memory interface often consumes significant time during the design cycle, even just to achieve basic functionality.

Partial reconfiguration

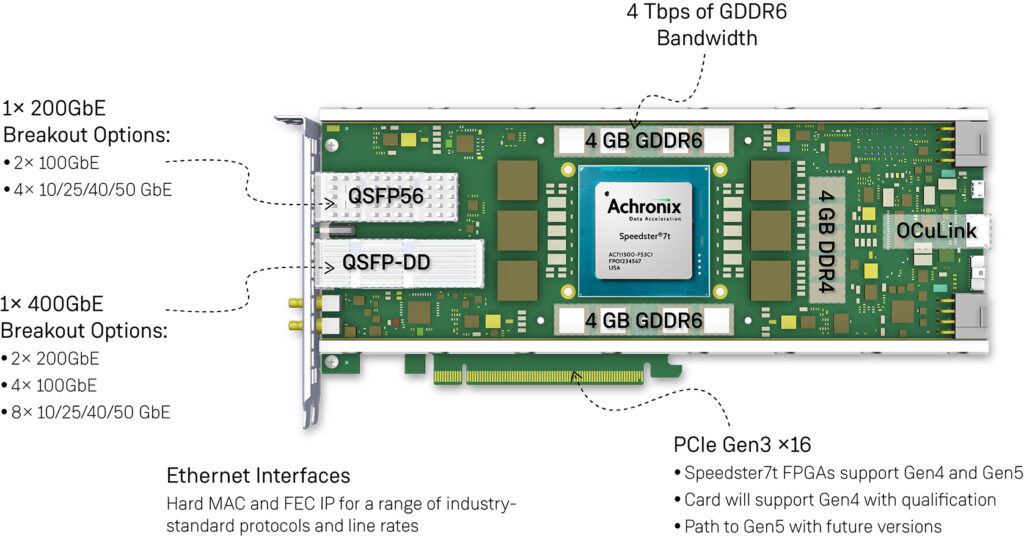

The NoC is available for certain read and write transactions prior to device configuration: PCIe to GDDR6, PCIe to DDR4 and PCIe to the FPGA Configuration Unit (FCU). Once the PCIe interface is set up, the FPGA can receive configuration bitstreams via the PCIe interface, destined for the FCU, to configure the rest of the device. After the device is configured, designers can reconfigure portions of the FPGA (partial reconfiguration) to add new functionality or increase acceleration performance without shutting down the FPGA. New partial reconfiguration bitstreams can be sent through the PCIe interface to the FCU to reconfigure any part of the device.

When part of the device is reconfigured, any data coming into or out of the newly-configured region can easily be accessed by instantiating a NAP in the desired area. The NoC removes the complexity of traditional FPGA partial reconfiguration; the user no longer has to worry about routing around existing logic functions and impacting performance, or not being able to access certain device pins due to existing logic in that region. This capability saves time and provides increased flexibility when using partial reconfiguration.

Additionally, partial reconfiguration allows adapting the logic within a device as workloads change. For example, if the FPGA is performing a compression algorithm on incoming data and that compression is no longer needed, the host CPU can tell the FPGA to reconfigure, loading a new design optimised for the next workload.

Partial reconfiguration can be executed independently at the fabric cluster level, whilst the device is still operational. One approach is to build a self-monitoring FPGA, in which a soft CPU watches device operation and initiates a partial reconfiguration in real time. This could be used to turn off logic to save power, or to add more accelerator blocks to temporarily handle a large increase in incoming data. These capabilities offer more configuration flexibility than ever before.

Hardware virtualisation

Using a NoC gives designers the ability to create virtualised, secure hardware within a single FPGA through the NAP and its AXI (Advanced eXtensible Interface) connection. Connecting a programmable-logic design directly to the NoC requires little more than instantiating a NAP and its AXI4 interface in the logic design. Each NAP also has an associated Address Translation Table (ATT) that converts a NAP logical address to a NoC physical address. The ATTs permit programmable-logic blocks to use local addresses whilst mapping NoC-directed transactions to addresses assigned by the NoC's global memory map. This feature can be used in many ways; for example, to allow all identical copies of an acceleration engine to use virtual, zero-based addressing, whilst sending traffic from each acceleration engine to a different physical memory location.

Each ATT entry also contains an access protection bit that prevents that node from accessing prohibited address ranges. This feature provides significant inter-process security: it stops multiple, simultaneous applications or tasks running on one FPGA from interfering with memory blocks assigned to other applications or tasks. The mechanism also helps prevent system crashes due to accidental or even intentional memory-address conflicts. Additionally, designers can use this scheme to prevent logic functions from accessing entire memory devices.
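The translation and protection behaviour described above can be modelled in a few lines of Python. This is a minimal software sketch, not the device's actual table format: the `AttEntry` fields, the `translate` function, the `AccessDenied` exception and all addresses are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttEntry:
    """One hypothetical ATT entry (field layout is an assumption)."""
    local_base: int     # start of the window in the NAP's local address space
    size: int           # window size in bytes
    physical_base: int  # where the window lands in the NoC global memory map
    allowed: bool       # access protection bit: False blocks the transaction


class AccessDenied(Exception):
    """Raised when an address is unmapped or its entry forbids access."""


def translate(table, local_addr):
    """Map a NAP-local address to a NoC physical address, or refuse it."""
    for entry in table:
        offset = local_addr - entry.local_base
        if 0 <= offset < entry.size:
            if not entry.allowed:
                raise AccessDenied(f"blocked: {local_addr:#x}")
            return entry.physical_base + offset
    raise AccessDenied(f"unmapped: {local_addr:#x}")


# Two identical engines both use zero-based local addresses, but each
# table sends the traffic to a different (made-up) physical region.
engine0_att = [AttEntry(0x0000, 0x1000, 0x8000_0000, True)]
engine1_att = [
    AttEntry(0x0000, 0x1000, 0x8000_1000, True),
    # Engine 1 is explicitly barred from engine 0's region.
    AttEntry(0x1000, 0x1000, 0x8000_0000, False),
]

print(hex(translate(engine0_att, 0x10)))  # 0x80000010
print(hex(translate(engine1_att, 0x10)))  # 0x80001010
```

Both engines issue the same local address `0x10` yet reach different physical locations, and any attempt by engine 1 to reach into engine 0's region raises `AccessDenied` rather than corrupting its data.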

Team-based design

Team-based design for FPGAs is not a new concept, but the underlying architecture and routing dependencies make it quite challenging. Once a portion of the design is finished by a team, a second team working on a new portion often experiences problems when accessing resources that need routing through the already-completed part. Equally, once routed, changing that region or its size impacts the rest of the FPGA.

With a NoC, design blocks can be mapped to any portion of the FPGA and undergo changes without affecting the timing, placement or routing of other FPGA blocks. Team-based design is made possible because the NAPs throughout the FPGA give each design block unrestricted access to the NoC for communication. Therefore, if a certain portion of a design grows in size, as long as there are enough FPGA resources available, the data flows are automatically managed by the NoC, freeing designers from worrying about meeting timing and about knock-on effects from other team members' changes.

Another unique feature of the NoC is the ability to allow designers to configure and validate I/O connections independently from the user logic. For example, one design team can validate a PCIe-to-GDDR6 interface whilst another can independently validate internal logic functions. This separation is made possible because the peripheral portion of the NoC connects the PCIe, GDDR6, DDR4 and FCU without consuming any FPGA resources. These connections can be tested without HDL code, which allows for simultaneous and independent validation of interfaces and logic. The capability eliminates dependencies between validation steps, and allows for overall faster validation than with traditional FPGA architectures.

400Gbps Ethernet applications

The challenge with implementing high-speed, 400Gbps Ethernet data paths in FPGAs is finding a bus width that the FPGA can run at the required clock rate. For 400G Ethernet, the only viable choices for full-bandwidth operation are a 1,024-bit bus running at 724MHz, or a 2,048-bit bus running at 642MHz. Such wide buses are difficult to route because they consume a vast amount of logic resources within the FPGA, and create timing closure challenges at the required rates, even in the most advanced FPGAs.

However, designers can use a new processing mode called “packet mode”, in which an incoming Ethernet stream is rearranged into four narrower streams of 32-byte packets, carried on four independent 256-bit buses running at 506MHz. This reduces the number of bytes wasted when a packet ends, and allows data to be streamed in parallel, without having to wait for the first packet to finish before starting the next transmission. The FPGA architecture is designed to enable packet mode by connecting the Ethernet MAC directly to specific NoC columns, and from the NoC columns to the fabric, using user-instantiated NAPs. Using the NoC column, data can be sent anywhere along that column into the FPGA fabric for further processing.
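The bus-width trade-off can be illustrated with some simple arithmetic. The sketch below is a rough model under stated assumptions: it ignores Ethernet framing overhead, assumes each packet is padded to a whole number of bus words, and uses hypothetical function names. It does not attempt to reproduce the 724MHz and 642MHz figures quoted above, whose derivation is not given here; it only shows the ideal lower bound on clock rate, how much of a wide bus a short packet leaves empty, and the aggregate capacity of the four packet-mode buses.

```python
import math

LINE_RATE_BPS = 400e9  # 400Gbps Ethernet


def ideal_clock_mhz(bus_bits, line_rate_bps=LINE_RATE_BPS):
    """Lowest clock that carries the line rate if every bus bit is used."""
    return line_rate_bps / bus_bits / 1e6


def bus_utilisation(packet_bytes, bus_bits):
    """Fraction of bus bytes carrying data when the last word is padded."""
    bus_bytes = bus_bits // 8
    words = math.ceil(packet_bytes / bus_bytes)
    return packet_bytes / (words * bus_bytes)


def aggregate_gbps(buses, bus_bits, clock_mhz):
    """Raw capacity of several independent buses running in parallel."""
    return buses * bus_bits * clock_mhz * 1e6 / 1e9


print(ideal_clock_mhz(1024))         # 390.625
print(bus_utilisation(65, 1024))     # 0.5078125
print(bus_utilisation(65, 256))
print(aggregate_gbps(4, 256, 506))   # 518.144
```

In this model a 65-byte packet fills only about half of a 1,024-bit word, which is why a wide bus must run well above its ideal clock to sustain full bandwidth, whereas the same packet wastes far less on 256-bit words. Four 256-bit buses at 506MHz provide roughly 518Gbps of raw capacity, leaving headroom above the 400Gbps line rate.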

Packet mode is configured using FPGA design tools, which greatly simplifies the design and enables higher productivity when handling 400Gbps Ethernet data streams.

Reduced logic utilisation

NoCs automatically reduce the amount of logic needed for a given design. Instead of using the FPGA LUTs and inter-block routing, a design can use the NoC. The complexity of connecting design elements into the NoC is automatically managed by the FPGA design tools, so designers can speed up the design without having to write HDL code. This approach simplifies the time-consuming challenge of achieving timing closure without degrading overall application performance due to routing congestion within the FPGA. The NoC also enables more efficient use of the device, without sacrificing performance, and significantly increases the quantity of LUTs available for computation.

To highlight this benefit, at Achronix we recently created a sample design featuring a 2D input image convolution. Each convolution block uses Machine-Learning Processor (MLP) and Block RAM (BRAM) resources, with each MLP performing 12 int8 multiplications in a single cycle. Forty 2D convolution blocks were chained together to use nearly all available BRAM and MLP resources within the device. In total, the 40 instances of the 2D convolution example design ran in parallel and used 94% of the MLPs and 97% of the BRAM, but just 8% of the LUTs. The remaining 92% of the available LUTs could still be used for other functions.

Fundamental shift

NoCs have enabled a fundamental shift in the FPGA design process. For the first time, a 2D NoC that connects all the system interfaces and FPGA fabric has been implemented. This new architecture makes FPGAs uniquely suitable for high-bandwidth applications whilst significantly improving designer productivity. Because the NoC manages all the networking functions between data accelerators within the FPGA and the high-speed data interfaces, designers need only design their data accelerators and connect them to a NAP primitive. The design tools and the NoC take care of everything else!